Generative AI and ChatGPT – the end of thinking (for ourselves)?

Munich, 22 March 2023

Since OpenAI released ChatGPT in November 2022, many curious people have experimented with this feat of technology, be it to write an essay or to devise a fitness plan. acatech President Jan Wörner opened acatech am Dienstag on 14 March by recounting his own experiments with the program and showed ChatGPT’s remarkably compelling description of what acatech is. A poem about acatech written by ChatGPT brought a smile to the faces in the audience. When asked who the current acatech President is, however, the AI gave the wrong answer. This shows clearly that, while ChatGPT has great potential, its results must be questioned.

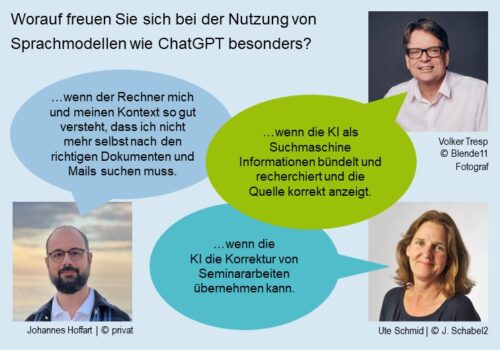

Volker Tresp, Professor for Machine Learning in Computer Science at LMU Munich and member of Plattform Lernende Systeme (PLS), outlined the underlying technology to provide an understanding of generative AI, especially language models such as ChatGPT. ChatGPT is based on the language model GPT-3 (Generative Pre-Trained Transformer), an AI algorithm that has learned to predict the next word based on the preceding words and sentences. What is new about ChatGPT compared with its predecessors is that the training process includes stages of supervised learning, a reward model and reinforcement learning. This probabilistic process also exposes the current limits of the technology: it always gives the answer with the greatest probability (even if it is incorrect); it sometimes makes up content or sources (“hallucination”); and its knowledge has a cut-off of 2021, because the model does not continue to learn online. Volker Tresp concluded that ChatGPT will have a huge influence on developments in the coming years and will turn out to be a game-changer.
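The idea of predicting the next word and always returning the most probable one can be illustrated with a deliberately tiny sketch. It is not how ChatGPT is implemented: a real model uses a transformer network over tokens, whereas this example only counts which word follows which in a made-up three-sentence corpus. All names here (`train_bigram`, `greedy_next`, `TOY_CORPUS`, the names "anna" and "ben") are hypothetical and chosen for illustration only.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for every word, which words follow it in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn the raw follow-up counts for `prev` into probabilities."""
    following = counts.get(prev)
    if not following:
        return {}
    total = sum(following.values())
    return {word: n / total for word, n in following.items()}


def greedy_next(counts, prev):
    """Return the single most probable next word, or None if unknown."""
    probs = next_word_probs(counts, prev)
    if not probs:
        return None
    return max(probs, key=probs.get)


def generate(counts, start, max_words=5):
    """Extend `start` one word at a time, always taking the likeliest word."""
    words = [start]
    for _ in range(max_words):
        nxt = greedy_next(counts, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# Assumed toy data: two older sentences name "anna", one newer names "ben".
TOY_CORPUS = [
    "the president is anna",
    "the president is anna",
    "the president is ben",
]
MODEL = train_bigram(TOY_CORPUS)

print(next_word_probs(MODEL, "is"))
print(generate(MODEL, "the"))
```

In this toy setting the model completes "the president is" with "anna" because that continuation is more frequent in its training data, regardless of whether it is still true. This mirrors, in miniature, the limitation described above: the most probable answer is returned even when it is incorrect or out of date.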

Current public debate about ChatGPT often includes talk of banning the technology, as the AI can now simply be prompted to complete homework and exam assignments. PLS member Ute Schmid, head of the Chair of Cognitive Systems at the University of Bamberg, weighed in on this point. In her opinion, there is not much point in banning novel technology. Rather, it is important to use technology to enhance and further human skills. That said, Ute Schmid also highlighted the problems that ChatGPT entails for education. Unlike on websites, the output is produced without context, so it is difficult to tell how credible and reliable the results are. In many areas, 90 per cent of the available content must be taken with a pinch of salt, said Ute Schmid. She also warned of the danger that the abilities users need to structure complex problems will regress. Ute Schmid sees the potential for ChatGPT to lighten the workload of learners as an opportunity. Because the AI could do repetitive tasks for them, learners would have more time and scope to develop a deeper understanding and become better trained in solving complex problems. Ideally, this would also free up time for teachers, who could invest that time in interacting with their classes, which would be a major help considering the growing shortage of teaching staff. In conclusion, Ute Schmid emphasised how important she thought it was to use the discussion about ChatGPT to think about education in a new way. It is high time for a rethink, she said, given the ready availability of digital content: independent thinking, critical analysis and transfer are the key skills here.

In the corporate environment, too, major changes can be expected: Johannes Hoffart, CTO of the AI Unit at SAP SE, gave an overview of potential applications in companies. He himself is still very impressed and surprised by how well ChatGPT understands context, yet there is no question of it being “the end of thinking for ourselves”. Rather, he said, it is a tool to be used. Generative AI could be used in business applications, such as digital assistants. For example, scattered information can be compiled in a targeted way faster than ever before. Structured documents can also be analysed automatically in a targeted way, without anyone having to search through large documents individually. However, the function that Johannes Hoffart sees as having the greatest potential for disruption is the generation of program code. Writing code based on prompts and having it verified by running checks already works well. Going forward, it is likely that humans will produce hardly any original code any more. Instead, humans will be able to realise more complex software requirements, such as improving interaction between different operating systems, using automated coding. Humans will then test the interoperability achieved. He also said that the purpose of generative AI is to support human-computer interaction.
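The workflow of generating code from a prompt and verifying it by running checks can be sketched as follows. This is a minimal illustration under stated assumptions, not a description of any SAP or OpenAI tooling: the "generated" candidates are hard-coded strings standing in for model output, and the helper names (`passes_checks`, `first_verified`) are hypothetical. Running untrusted generated code with `exec` is only acceptable in a toy setting like this one; a real system would isolate it in a sandbox.

```python
def passes_checks(source, func_name, checks):
    """Run candidate source code and test it against input/output pairs."""
    namespace = {}
    try:
        exec(source, namespace)  # toy setting only; sandbox this in practice
        func = namespace[func_name]
        return all(func(*args) == expected for args, expected in checks)
    except Exception:
        # Syntax errors, missing functions and runtime errors all count as failure.
        return False


def first_verified(candidates, func_name, checks):
    """Return the first candidate that passes every check, or None."""
    for source in candidates:
        if passes_checks(source, func_name, checks):
            return source
    return None


# Stand-ins for model output: the first candidate contains a bug.
CANDIDATES = [
    "def add(a, b):\n    return a - b\n",
    "def add(a, b):\n    return a + b\n",
]

# Checks written by a human: (arguments, expected result).
CHECKS = [((1, 2), 3), ((0, 0), 0), ((-1, 1), 0)]

ACCEPTED = first_verified(CANDIDATES, "add", CHECKS)
print(ACCEPTED)
```

The division of labour matches the one outlined in the talk: the candidates come from the generator, while the checks that decide what is accepted remain a human responsibility.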

In the closing discussion, a few points from the speeches were revisited, for example the question of how generated content could reliably be recognised as such. Volker Tresp and Ute Schmid answered that a technical solution for recognising such texts was a possibility but, at the same time, that research on this was urgently required, as online learning is associated with a risk of more and more fake news. Some audience members also asked about technology impact assessment, given that the technology has recently been made available to the general public without any assessment of its impact on humans. In relation to this, Ute Schmid reiterated that the key consideration when it comes to technologies is human development, so that appropriate skills are acquired. ChatGPT’s ability to understand independently is often disputed, but Johannes Hoffart pointed out that, at least in terms of program code, we can say that it does in fact have understanding.

Jan Wörner summed up by saying that he currently sees ChatGPT as a helpful tool for “rough” ideas and amusing creations. For serious research, however, he recommends relying on verified sources for now.

Lastly, moderator Birgit Obermeier, PLS office, asked which specific applications of language models like ChatGPT the panellists are looking forward to.